XML Preliminaries

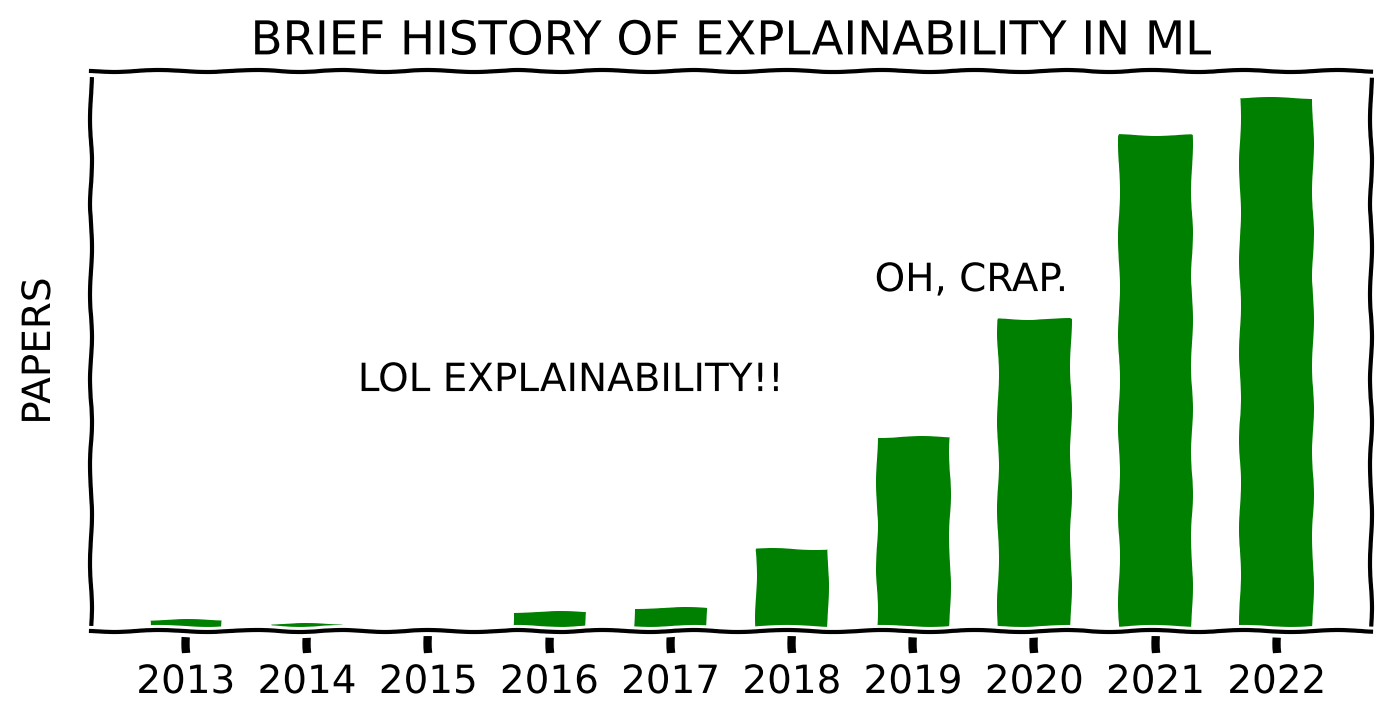

Brief History of Explainability

Expert Systems (1970s & 1980s)

Transparent Machine Learning Models

Rise of the Dark Side (Deep Neural Networks)

- No need to engineer features (by hand)

- High predictive power

- Black-box modelling

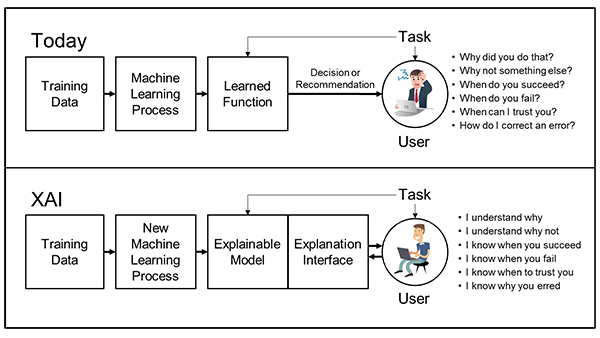

DARPA’s XAI Concept

Why We Need Explainability

Expectations Mismatch

We tend to request explanations mostly when a phenomenon disagrees with our expectations.

For example, an ML model behaves differently from what we envisaged and outputs an unexpected prediction.

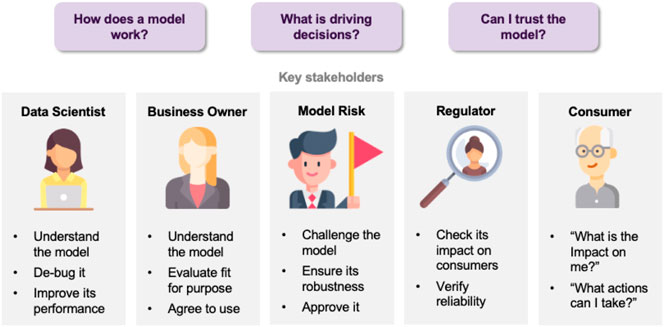

Stakeholders

Purpose or Role

- Fairness

- Privacy

- Reliability and Robustness

- Causality

- Trust

Trustworthiness / Reliability / Robustness / Causality

No silly mistakes & socially acceptable

Fairness

Does not discriminate & is not biased

Benefits

New knowledge

Aids in scientific discovery

Legislation

Does not break the law

- EU’s General Data Protection Regulation

- California Consumer Privacy Act

Debugging / Auditing

Identify modelling errors and mistakes

Human–AI co-operation

Help humans complete tasks

Drawbacks

Safety / Security

Abuse transparency to steal a (proprietary) model

Manipulation

Use transparency to game a system, e.g., credit scoring

Pitfalls

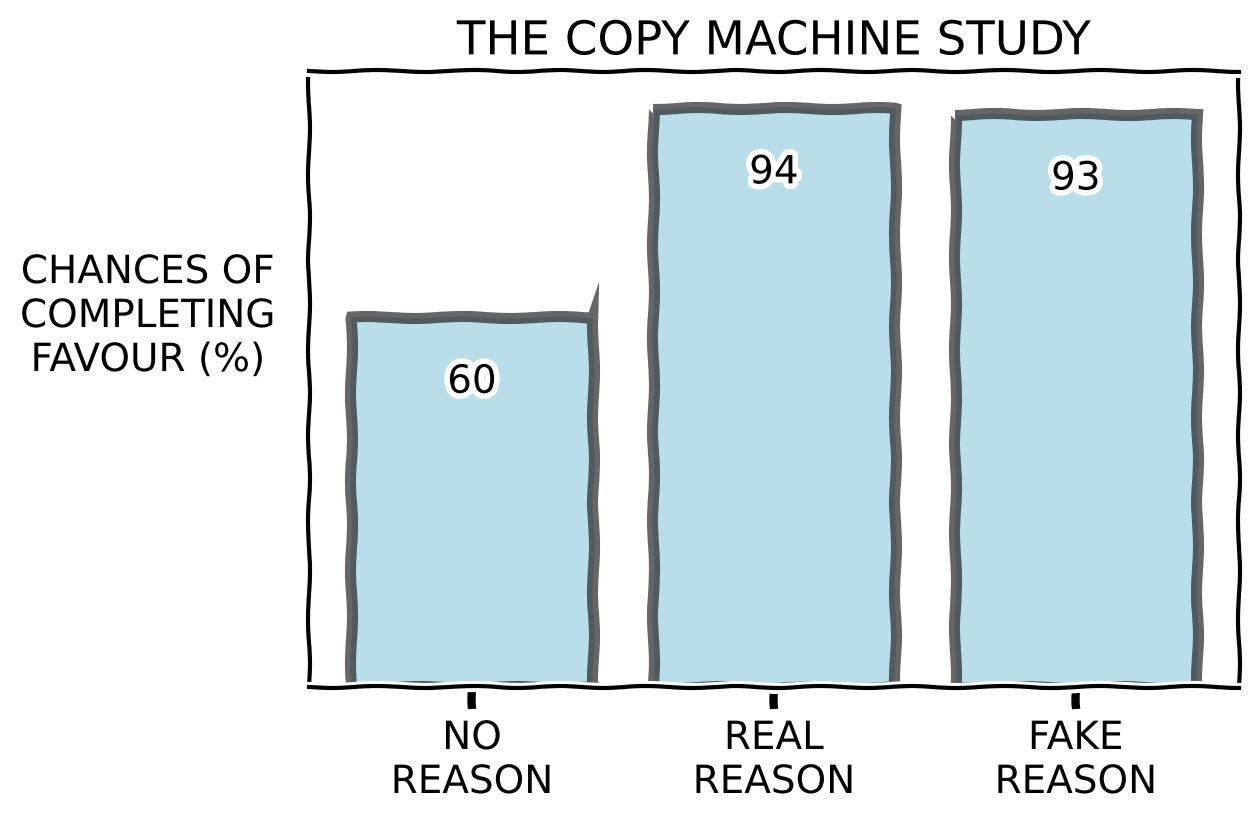

Copy machine study done by Langer, Blank, and Chanowitz (1978):

Explanation Types

Explainability Source

- ante-hoc – intrinsically transparent predictive models (transparency by design)

- post-hoc – derived from pre-existing predictive models that may themselves be unintelligible (usually requires an additional explanatory modelling step)

Explanation Provenance

Being ante-hoc does not necessarily entail being explainable or human-understandable

- endogenous explanation – based on human-comprehensible concepts operated on by a transparent model

- exogenous explanation – based on human-comprehensible concepts constructed outside of the predictive model (by the additional modelling step)

Explanation Domain

Original domain

Transformed domain

Explanation Types

- model-based – derived from model internals

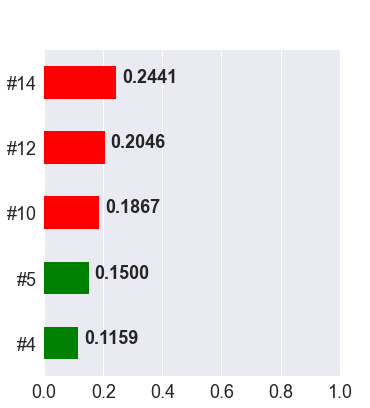

- feature-based – regarding importance or influence of data features

- instance-based – carried by a real or fictitious data point

- meta-explainers – one of the above, but not extracted directly from the predictive model being explained (using an additional explainability modelling step, e.g., surrogate)

Explanation Family

- associations between antecedent and consequent

- feature importance

- feature attribution / influence

- rules

- exemplars (prototypes & criticisms)

Explanation Family

contrasts and differences

- (non-causal) counterfactuals, i.e., contrastive statements

- prototypes & criticisms

Explanation Family

causal mechanisms

- causal counterfactuals

- causal chains

- full causal model

Explanatory Medium

- (statistical / numerical) summarisation

- visualisation

- textualisation

- formal argumentation

Communication of Explanations

Static artefact

Interactive (explanatory) protocol

- interactive interface

- interactive explanation

Explainability Scope

| | global | cohort | local |
|---|---|---|---|
| data | a set of data | a subset of data | an instance |
| model | model space | model subspace | a point in model space |
| prediction | a set of predictions | a subset of predictions | an individual prediction |

- algorithmic explanation – the learning algorithm, not the resulting model; e.g., modelling assumptions, caveats, compatible data types, etc.

Explainability Target

Focused on a single class (technically limited)

implicit context

Why \(A\)? (…and not anything else, i.e., \(B \cup C \cup \ldots\))

explicit context

Why \(A\) and not \(B\)?

Multi-class explainability (Sokol and Flach 2020b)

If 🌧️, then \(A\); else if ☀️ & 🥶, then \(B\); else if ☀️ & 🥵, then \(C\).

Important Developments

Where Is the Human? (circa 2017)

Humans and Explanations

- Human-centred perspective on explainability

- Infusion of explainability insights from social sciences

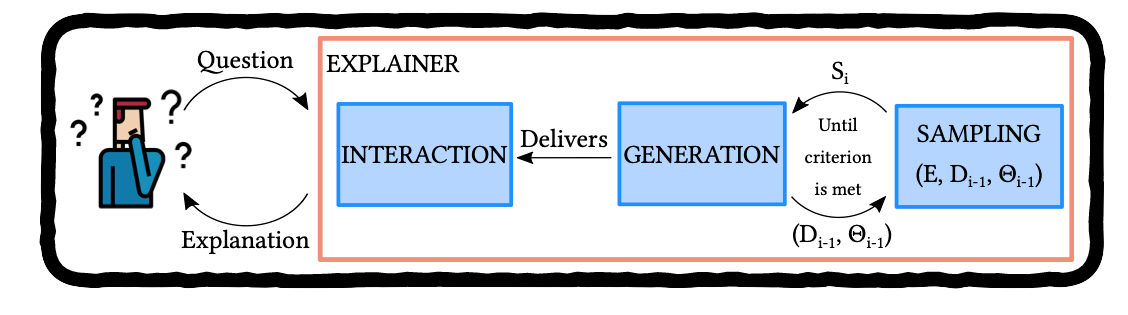

- Interactive dialogue (bi-directional explanatory process)

- Contrastive statements (e.g., counterfactual explanations)

Exploding Complexity (2019)

Ante-hoc vs. Post-hoc

Black Box + Post-hoc Explainer

- Choose a well-performing black-box model

- Use an explainer that is

  - post-hoc (can be retrofitted into pre-existing predictors)

  - and possibly model-agnostic (works with any black box)

Caveat: The No Free Lunch Theorem

Post-hoc explainers have poor fidelity

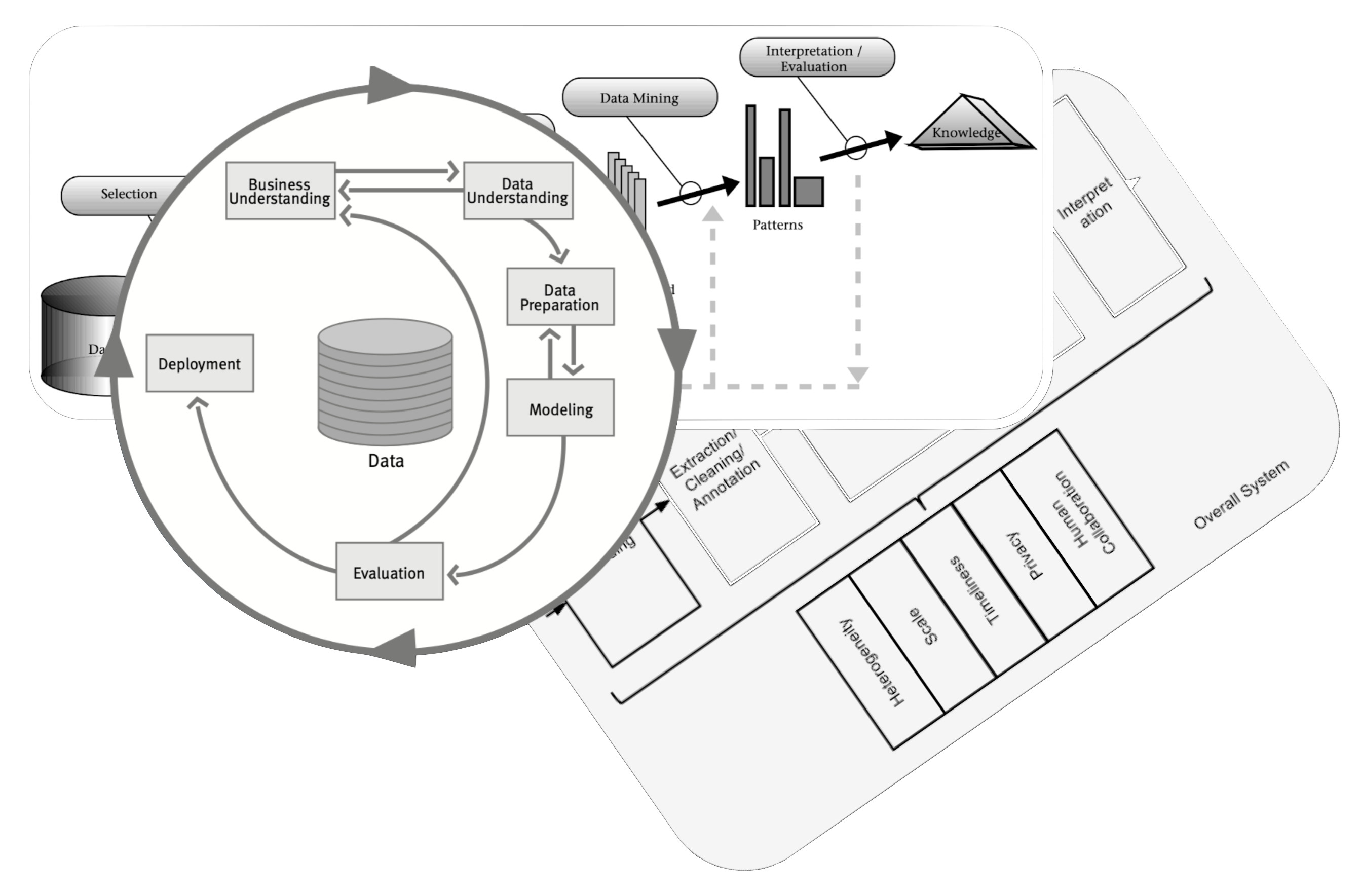
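Fidelity can be quantified as the share of inputs on which the explainer agrees with the black box. The sketch below is a toy illustration under stated assumptions, not a specific explainer from the literature: `black_box`, `fit_stump` and `fidelity` are hypothetical names, and the surrogate is a single-threshold rule fitted by brute force.

```python
import random


def black_box(x):
    # Hypothetical opaque classifier: positive class only inside a band.
    return 1 if 0.3 < x < 0.7 else 0


def fit_stump(xs, labels):
    """Fit a one-threshold surrogate that maximises agreement with `labels`."""
    best = None
    for threshold in sorted(set(xs)):
        for above in (0, 1):
            below = 1 - above
            preds = [above if x > threshold else below for x in xs]
            agreement = sum(p == l for p, l in zip(preds, labels)) / len(xs)
            if best is None or agreement > best[0]:
                best = (agreement, threshold, above)
    _, threshold, above = best
    return lambda x: above if x > threshold else 1 - above


def fidelity(surrogate, predictor, xs):
    """Fraction of points on which the surrogate mimics the predictor."""
    return sum(surrogate(x) == predictor(x) for x in xs) / len(xs)


rng = random.Random(0)
xs = [rng.random() for _ in range(500)]

# Global surrogate: one threshold cannot describe a band.
global_surrogate = fit_stump(xs, [black_box(x) for x in xs])
global_fidelity = fidelity(global_surrogate, black_box, xs)

# Local surrogate: restricted to a region with a single decision boundary.
local_xs = [x for x in xs if x < 0.5]
local_surrogate = fit_stump(local_xs, [black_box(x) for x in local_xs])
local_fidelity = fidelity(local_surrogate, black_box, local_xs)

print(f"global fidelity: {global_fidelity:.2f}")
print(f"local fidelity:  {local_fidelity:.2f}")
```

In this toy setting the simple surrogate mimics the black box well only within a restricted region, which is one way the fidelity caveat shows up in practice.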

- Explainability needs a process similar to KDD, CRISP-DM or BigData

![Data process]()

- Focus on engineering informative features and inherently transparent models

It requires effort

XAI process

A generic eXplainable Artificial Intelligence process is beyond our reach at the moment


XAI Taxonomy spanning social and technical desiderata:

• Functional • Operational • Usability • Safety • Validation •

(Sokol and Flach, 2020. Explainability Fact Sheets: A Framework for Systematic Assessment of Explainable Approaches)

Framework for black-box explainers

(Henin and Le Métayer, 2019. Towards a generic framework for black-box explanations of algorithmic decision systems)

![XAI process]()

Examples of Explanations

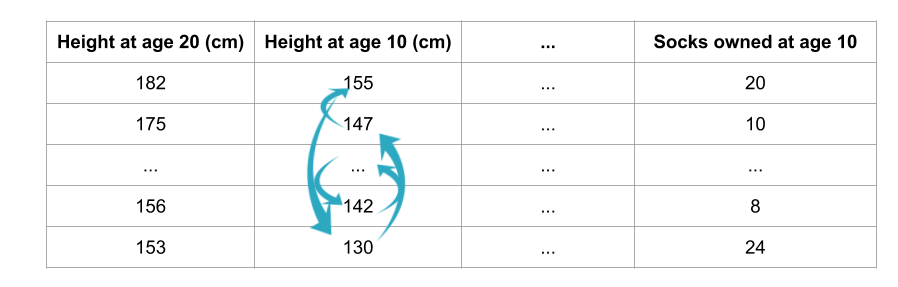

Permutation Feature Importance
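A minimal sketch of the idea, assuming importance is measured as the average increase in prediction error after shuffling one feature column. The `model`, the synthetic data and the helper `permutation_feature_importance` are hypothetical and written with the standard library only; a real analysis would normally rely on an established implementation.

```python
import random


def model(row):
    # Hypothetical black box: strong use of x0, weak use of x1, ignores x2.
    return 3.0 * row[0] + 0.5 * row[1]


def mean_squared_error(predict, X, y):
    return sum((predict(r) - t) ** 2 for r, t in zip(X, y)) / len(X)


def permutation_feature_importance(predict, X, y, n_repeats=10, seed=0):
    """Average error increase after shuffling each feature column in turn."""
    rng = random.Random(seed)
    baseline = mean_squared_error(predict, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [r[j] for r in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [r[:j] + [v] + r[j + 1:] for r, v in zip(X, column)]
            increases.append(mean_squared_error(predict, X_perm, y) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


rng = random.Random(42)
X = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
y = [model(r) for r in X]

importances = permutation_feature_importance(model, X, y)
for j, value in enumerate(importances):
    print(f"x{j}: {value:.3f}")
```

Because the toy model never reads `x2`, shuffling that column leaves the error unchanged, so its importance is zero; the ranking of the other two features follows the size of their coefficients.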

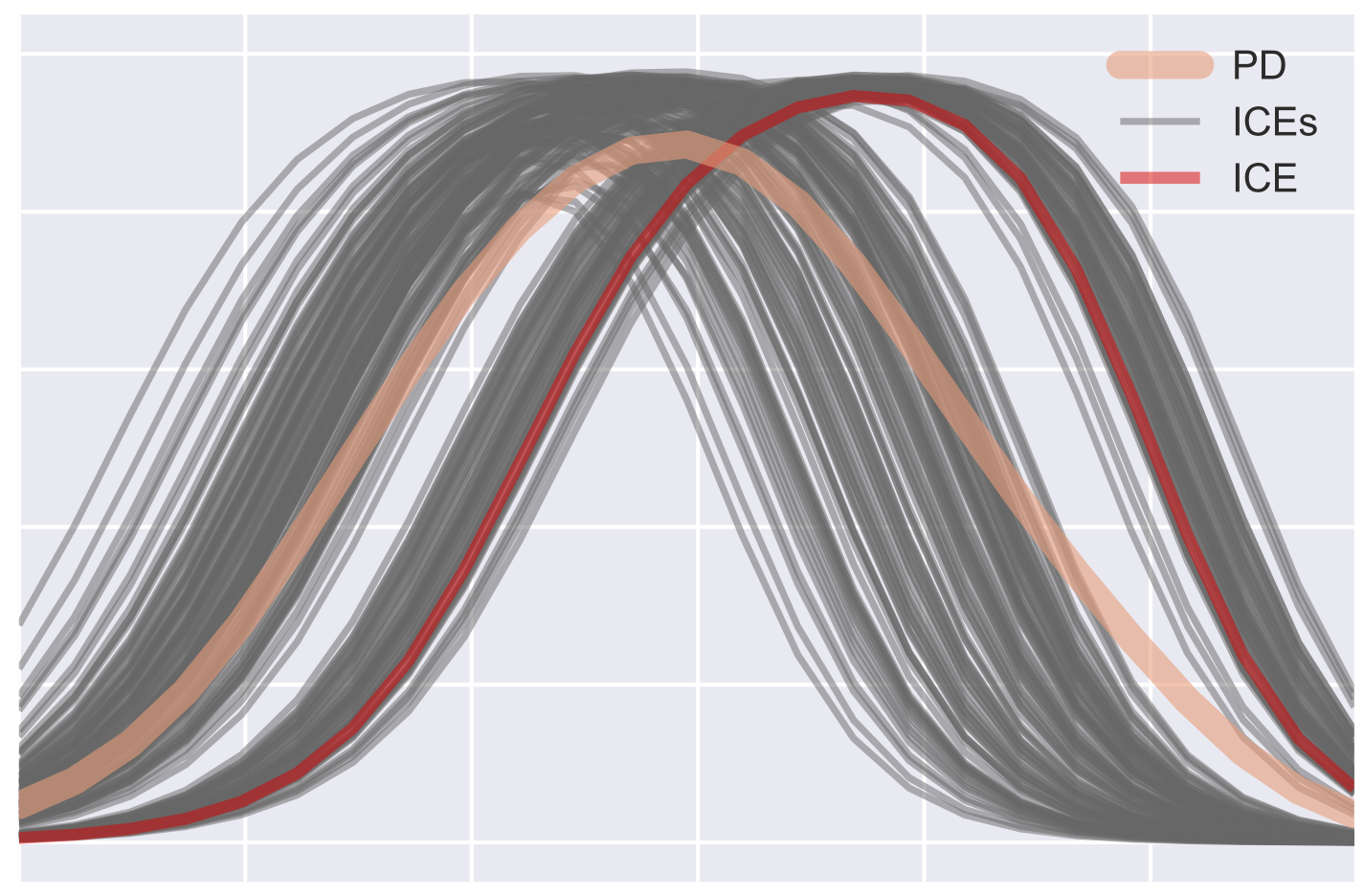

Individual Conditional Expectation & Partial Dependence
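A minimal sketch, assuming the usual construction: an ICE curve varies one feature of a single instance over a grid while holding the others fixed, and the PD curve is the pointwise average of the ICE curves. The `model`, data and helper names are hypothetical.

```python
def model(row):
    # Hypothetical black box with an interaction between x0 and x1.
    return row[0] * row[1]


def ice_curves(predict, X, feature, grid):
    """One curve per instance: predictions as `feature` sweeps over `grid`."""
    curves = []
    for row in X:
        curve = []
        for value in grid:
            modified = list(row)
            modified[feature] = value  # all other features stay as observed
            curve.append(predict(modified))
        curves.append(curve)
    return curves


def partial_dependence(curves):
    """Pointwise average of the ICE curves."""
    n = len(curves)
    return [sum(c[k] for c in curves) / n for k in range(len(curves[0]))]


X = [[0.0, 1.0], [0.0, -1.0]]
grid = [-1.0, 0.0, 1.0]

ice = ice_curves(model, X, feature=0, grid=grid)
pd_curve = partial_dependence(ice)

print("ICE:", ice)
print("PD: ", pd_curve)
```

In this toy example the two ICE curves have opposite slopes, so their average is flat: the PD curve alone would suggest that `x0` has no effect, whereas the ICE curves reveal the interaction.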

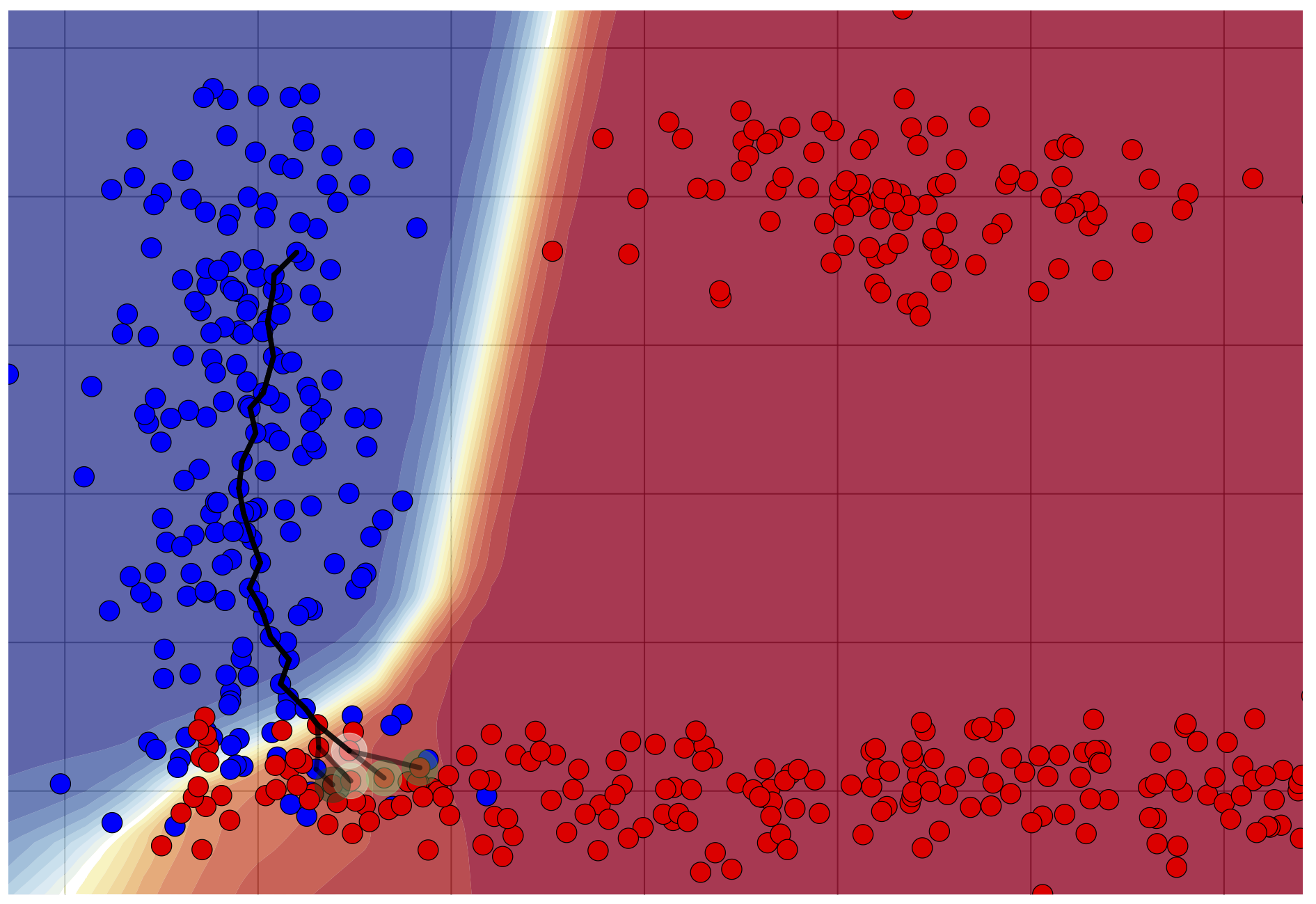

FACE Counterfactuals
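A heavily simplified sketch in the spirit of FACE, assuming its core idea is to reach a counterfactual by following a path through observed data points rather than by jumping straight across feature space. This is not the published algorithm: edges here use plain Euclidean length with no density weighting, and `predict`, `build_graph` and `counterfactual_path` are hypothetical names.

```python
import heapq
import math


def predict(point):
    # Hypothetical classifier: class 1 to the right of x = 2.5.
    return 1 if point[0] > 2.5 else 0


def build_graph(points, epsilon):
    """Connect every pair of data points that lie within `epsilon`."""
    graph = {i: [] for i in range(len(points))}
    for i, p in enumerate(points):
        for j, q in enumerate(points):
            distance = math.dist(p, q)
            if i != j and distance <= epsilon:
                graph[i].append((j, distance))
    return graph


def counterfactual_path(points, graph, start, target_class):
    """Dijkstra search for the closest reachable point of `target_class`."""
    best = {start: 0.0}
    previous = {}
    queue = [(0.0, start)]
    visited = set()
    while queue:
        cost, node = heapq.heappop(queue)
        if node in visited:
            continue
        visited.add(node)
        if predict(points[node]) == target_class:
            path = [node]
            while path[-1] != start:
                path.append(previous[path[-1]])
            return path[::-1], cost
        for neighbour, weight in graph[node]:
            new_cost = cost + weight
            if new_cost < best.get(neighbour, math.inf):
                best[neighbour] = new_cost
                previous[neighbour] = node
                heapq.heappush(queue, (new_cost, neighbour))
    return None, math.inf  # no counterfactual reachable through the data


points = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0), (3.0, 5.0)]
graph = build_graph(points, epsilon=1.5)
path, cost = counterfactual_path(points, graph, start=0, target_class=1)
print(path, cost)
```

The returned path lists the intermediate data points between the explained instance and its counterfactual, which can be read as a sequence of small, data-supported changes.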

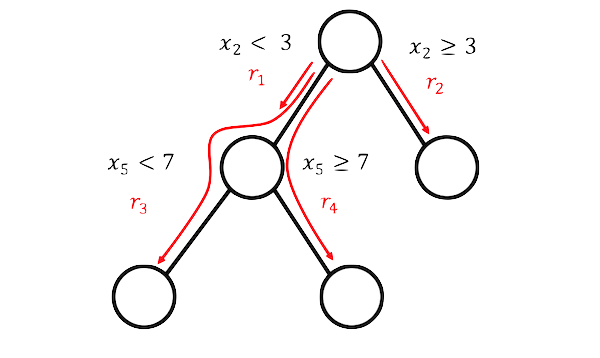

RuleFit

Useful Resources

📖 Books

- Survey of machine learning interpretability in the form of an online book

- Overview of explanatory model analysis published as an online book

📝 Papers

- General introduction to interpretability (Sokol and Flach 2021)

- Introduction to human-centred explainability (Miller 2019)

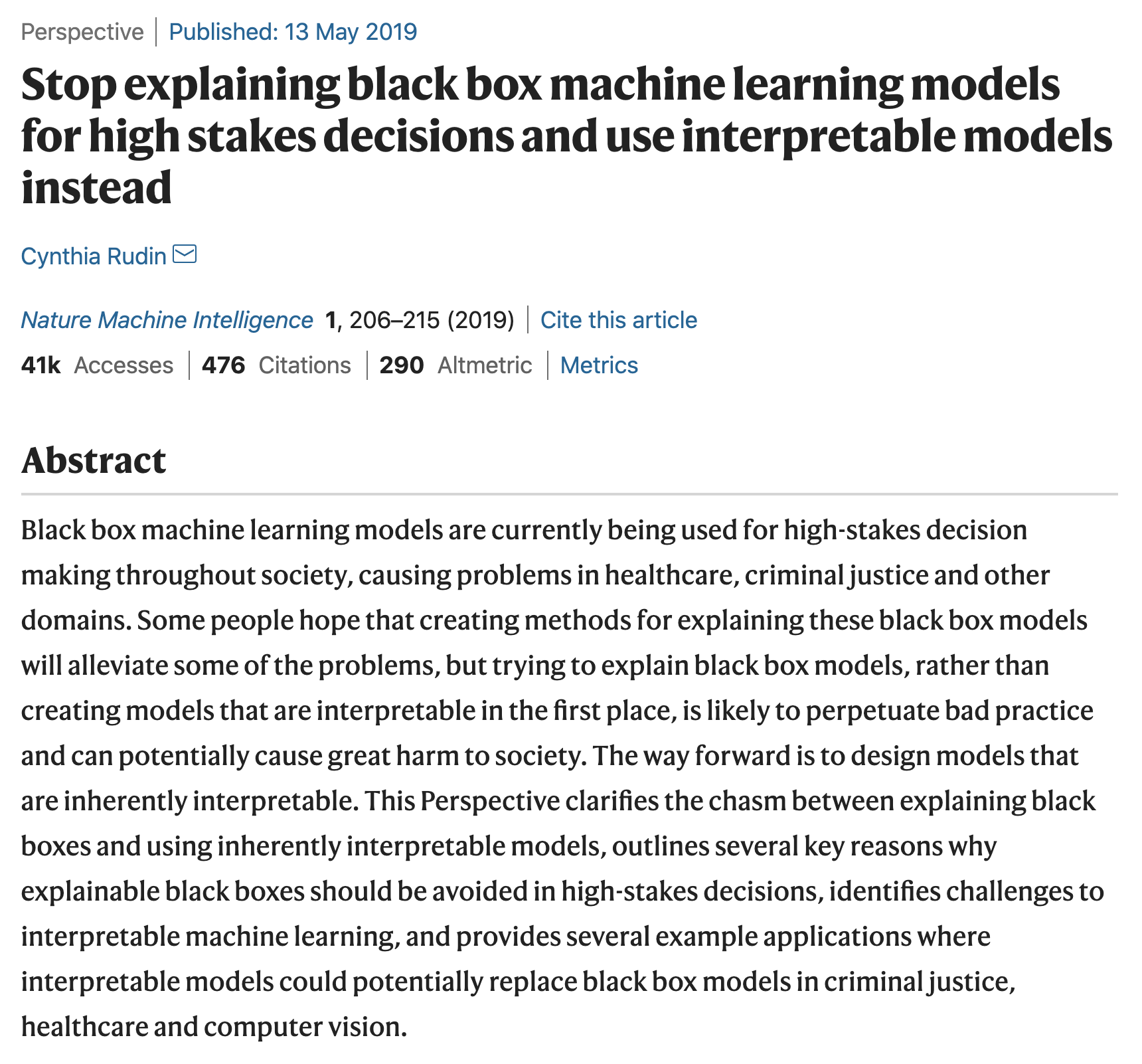

- Critique of post-hoc explainability (Rudin 2019)

- Survey of interpretability techniques (Guidotti et al. 2018)

- Taxonomy of explainability approaches (Sokol and Flach 2020a)

💽 Software

Wrap Up

Summary

- The landscape of explainability is fast-paced and complex

- Don’t expect a universal solution

- The involvement of humans – as explainees – makes it all the more complicated

Bibliography

Questions